An activation function is a mathematical function applied to a neuron's input (typically the weighted sum of its inputs plus a bias) to produce the neuron's output.

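Some activation functions, such as the binary step function, only "activate" (produce a non-zero output) when the computed result **reaches the specified threshold.** Others, such as sigmoid or ReLU, produce a continuous range of outputs instead of an on/off signal.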

> A mathematical operation that transforms (and, for functions such as sigmoid or tanh, squashes) the neuron's input into an output. The output is then passed forward to the neurons in the subsequent layer.

### Activation Function Thresholds

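- A threshold is a pre-defined numerical value in the function: inputs below it are treated differently from inputs at or above it. This breakpoint is one way to add non-linearity to the output.

- Non-linear problems are those where there is no direct linear relationship between the input and output. Without a non-linear activation, a stack of layers collapses into a single linear transformation, so the network could only model linear relationships.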
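The binary step function is the textbook example of a threshold-based activation. Below is a minimal Python sketch; the name `binary_step` and the default threshold of 0 are illustrative assumptions, not taken from the text above. The last line shows why a threshold makes the function non-linear: the sum of two outputs is not the output of the sum.

```python
def binary_step(x: float, threshold: float = 0.0) -> float:
    """Fire (return 1.0) only when the input reaches the threshold, else 0.0."""
    return 1.0 if x >= threshold else 0.0

print(binary_step(0.7))                 # 1.0
print(binary_step(-0.3))                # 0.0
print(binary_step(0.4, threshold=0.5))  # 0.0 (below a higher threshold)

# Non-linear: f(a) + f(b) differs from f(a + b).
print(binary_step(0.5) + binary_step(0.5), binary_step(1.0))  # 2.0 1.0
```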

### Activation Function Types

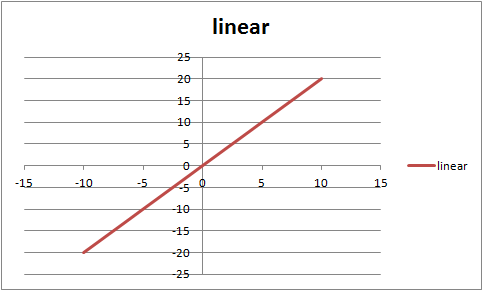

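1. Linear Activation Function

The output is proportional to the input: f(x) = x, or more generally f(x) = ax. Because its graph is a straight line, it adds no non-linearity to the output, and it is typically used only in the output layer of regression models.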
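A short sketch of why a linear activation cannot add non-linearity: applying one linear function after another is still just one linear function. The scale parameter `a` is an illustrative assumption; with `a = 1.0` this is the identity function.

```python
def linear(x: float, a: float = 1.0) -> float:
    """Linear activation: the output is the input scaled by a."""
    return a * x

# Scaling by 2 and then by 3 is the same as a single scaling by 6.
print(linear(linear(1.5, a=2.0), a=3.0))  # 9.0
print(linear(1.5, a=6.0))                 # 9.0

# Additivity holds too, which is exactly what "linear" means.
print(linear(2.0) + linear(3.0), linear(5.0))  # 5.0 5.0
```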

2. Sigmoid Activation Function

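The sigmoid activation function is “S” shaped: σ(x) = 1 / (1 + e^(-x)). It adds non-linearity to the output and squashes any input into the range between 0 and 1 (approaching but never reaching either end), so the output can be read as a probability.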

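_If your output is a binary (0 or 1) label, as in binary classification, use the sigmoid activation function in the output layer and convert its probability into a label with a cut-off, commonly 0.5._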
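A minimal sketch of sigmoid plus a 0.5 cut-off for binary labels, assuming scalar inputs. The helper `predict_label` and its default threshold are hypothetical names chosen for illustration. The two branches are a common trick to keep `math.exp` from overflowing on large negative inputs; both compute the same function.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid: maps any real number into the interval (0, 1)."""
    # Two algebraically equivalent branches avoid math.exp() overflow for large |x|.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def predict_label(x: float, threshold: float = 0.5) -> int:
    """Hypothetical helper: turn the sigmoid probability into a hard 0/1 label."""
    return 1 if sigmoid(x) >= threshold else 0

print(sigmoid(0.0))            # 0.5
print(round(sigmoid(2.0), 4))  # 0.8808
print(predict_label(-1.0))     # 0
print(predict_label(3.0))      # 1
```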

3. Tanh Activation Function

Tanh is closely related to the sigmoid activation function: it is a rescaled and shifted sigmoid, tanh(x) = 2σ(2x) - 1. Like sigmoid, it adds non-linearity to the output, but its output lies in the range -1 to 1 and is centred on zero.
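The relationship between tanh and sigmoid can be checked numerically. This sketch builds tanh from a (numerically stable) sigmoid and compares it with Python's built-in `math.tanh`; the function name `tanh_via_sigmoid` is an illustrative assumption.

```python
import math

def sigmoid(x: float) -> float:
    """Numerically stable logistic sigmoid."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def tanh_via_sigmoid(x: float) -> float:
    """tanh expressed as a rescaled, shifted sigmoid: 2 * sigmoid(2x) - 1."""
    return 2.0 * sigmoid(2.0 * x) - 1.0

for x in (-2.0, 0.0, 0.5, 3.0):
    # Should agree with the standard library's math.tanh up to rounding error.
    print(x, round(tanh_via_sigmoid(x), 6), round(math.tanh(x), 6))
```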

4. Rectified Linear Unit Activation Function (ReLU)

ReLU is one of the most widely used activation functions and is generally preferred in the hidden layers. The concept is straightforward: f(x) = max(0, x), so negative inputs become 0 and positive inputs pass through unchanged. The bend at 0 adds non-linearity to the output, and the result ranges from 0 to infinity.
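ReLU is simple enough to write in one line. A minimal scalar sketch (the function name `relu` is just a descriptive choice); the last line shows that, despite being linear on each side of 0, the function as a whole is not linear.

```python
def relu(x: float) -> float:
    """Rectified Linear Unit: keep positive inputs, clamp negatives to 0."""
    return max(0.0, x)

values = [-3.0, -0.5, 0.0, 2.0, 10.0]
print([relu(v) for v in values])  # [0.0, 0.0, 0.0, 2.0, 10.0]

# Non-linear overall: relu(-1) + relu(1) is not relu(-1 + 1).
print(relu(-1.0) + relu(1.0), relu(0.0))  # 1.0 0.0
```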

5. Softmax Activation Function

Softmax is a generalisation of the sigmoid activation function to more than two classes. It converts a vector of raw scores into probabilities that are all positive and sum to 1, which makes it the usual choice for the output layer in multi-class classification, where the model must pick one class out of several.
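A minimal softmax sketch over a plain Python list, assuming three hypothetical class scores. Subtracting the largest score before exponentiating is a standard overflow guard and does not change the result. The final line illustrates the link to sigmoid: with two classes and scores `[x, 0]`, the first softmax probability equals sigmoid(x).

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Convert raw class scores (logits) into probabilities that sum to 1."""
    m = max(scores)  # shift by the max so math.exp() cannot overflow
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]  # hypothetical scores for three classes
probs = softmax(logits)
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
print(probs.index(max(probs)))       # 0, the predicted class

# Two-class case matches sigmoid: softmax([x, 0])[0] == 1 / (1 + e^(-x)).
print(softmax([1.5, 0.0])[0], 1.0 / (1.0 + math.exp(-1.5)))
```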